In this two-part blog series, I explore a crucial challenge I see leaders facing as they make bold moves with AI: true success hinges on a robust foundation. AI isn’t plug-and-play; it is compute-heavy and data-hungry, and without the right foundations in place, your AI initiatives could falter. In part 1, I covered the operational bedrock for AI: infrastructure, data, and application architecture. You can read it here.

In part 2, I explore the elements that I believe are critical to sustained, responsible, and secure AI adoption in any organization.

Strategy – AI for Competitive Advantage

The strategic value of AI and GenAI is multifaceted and should be leveraged for several kinds of competitive benefit. Crucially, AI is no longer merely a tool for incremental improvements or cost reductions; it has become a fundamental strategic capability that increasingly determines market leadership and long-term viability. Hence, when identifying and building AI use cases, leaders should consider each of the following four levers.

- Enhanced Efficiency and Lower Operating Costs: AI enables enterprises to deliver higher-quality outputs in shorter timeframes and automates routine, repetitive tasks, freeing employees to focus on higher-value activities. Examine every step of the process life cycle to identify where AI use cases would most reduce operating costs; such use cases can also significantly speed up the process. Examples include call-center agent automation, billing-cycle automation, and HR processes delivered through an AI agent.

- Improved Decision-Making: AI provides powerful capabilities in data analysis, pattern recognition, and predictive modeling. This enables businesses to make informed, data-driven decisions quickly and accurately, shifting from reactive to proactive strategic approaches. Each business leader should consider creating such tools and embedding them in everyday processes so that everyone, from leadership to the most junior employee, can use them to make better decisions. Examples include allocating the right task to the right workforce member, or ensuring the right number and amount of inputs are fed to a furnace in a plant to achieve the best output.

- Innovation and Competitive Advantage: When used in the design and planning stages of a product or service, AI can help enterprises create better features. AI allows companies to experiment more freely and at a much larger scale, positioning them ahead of competitors. Examples include AI that determines the spin cycles of a washing machine and AI in clinical research and validation. Today, AI tools can also help engineers explore more design options for aircraft durability and resilience, and warehouse automation at Amazon and Walmart delivers improved results and competitive advantage.

- Customer Experience Enhancement: AI personalizes customer interactions and significantly improves customer service. Through recommendation engines and continuous behavioural analysis, AI delivers more relevant and responsive experiences tailored to individual needs. Spotify is one of the most recent examples of improved customer experience delivered through AI, and most automotive companies are building AI into interactive cockpits to deliver a better customer experience.

Any successful AI/GenAI transformation needs a clear, dominant strategic vision, actively championed by committed leadership. This vision is paramount because it dictates where and how AI investments will yield the most business value. AI should not be used only for operational benefits; it must be considered across all four levers above.

Governance & Pragmatic Execution

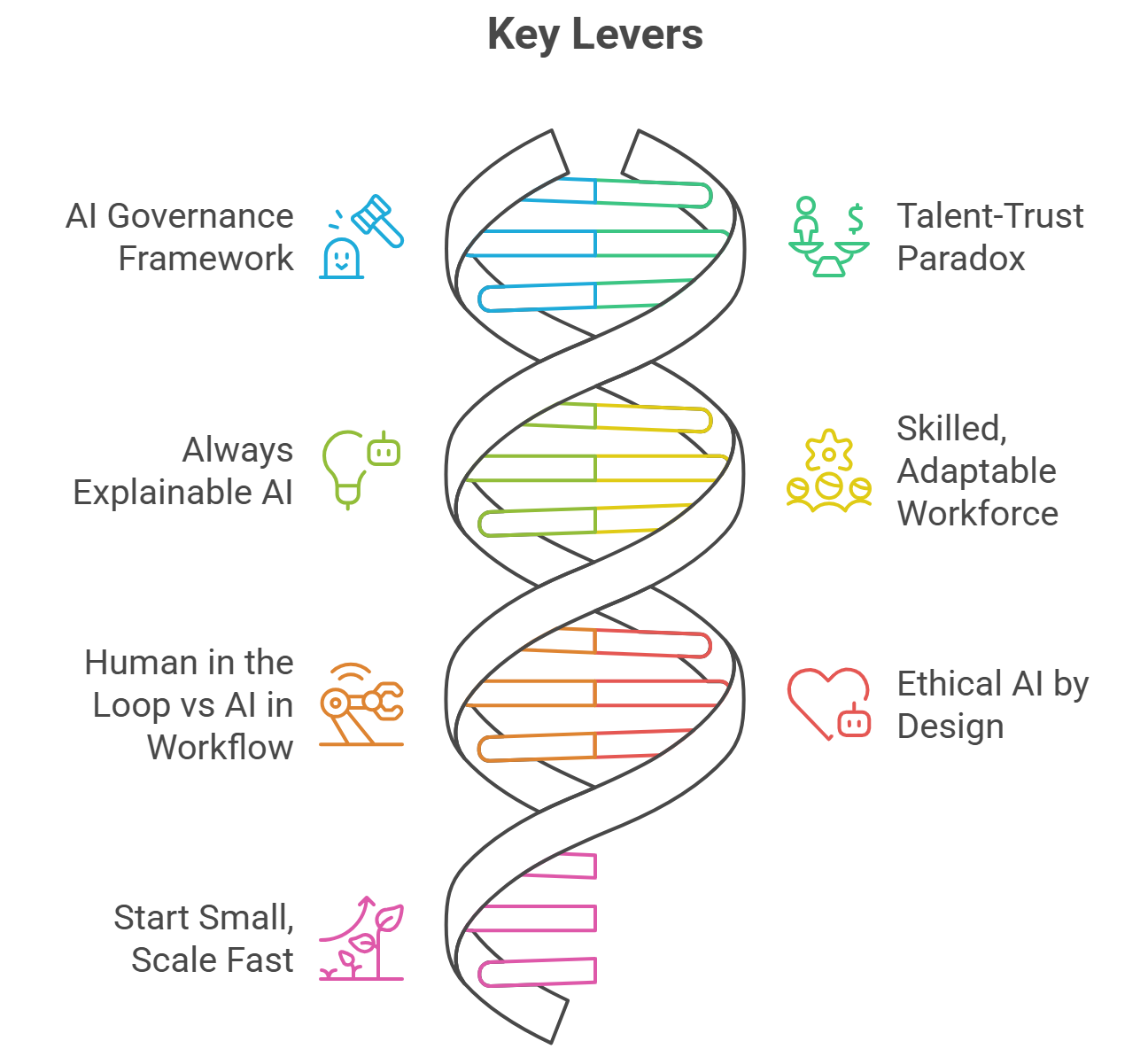

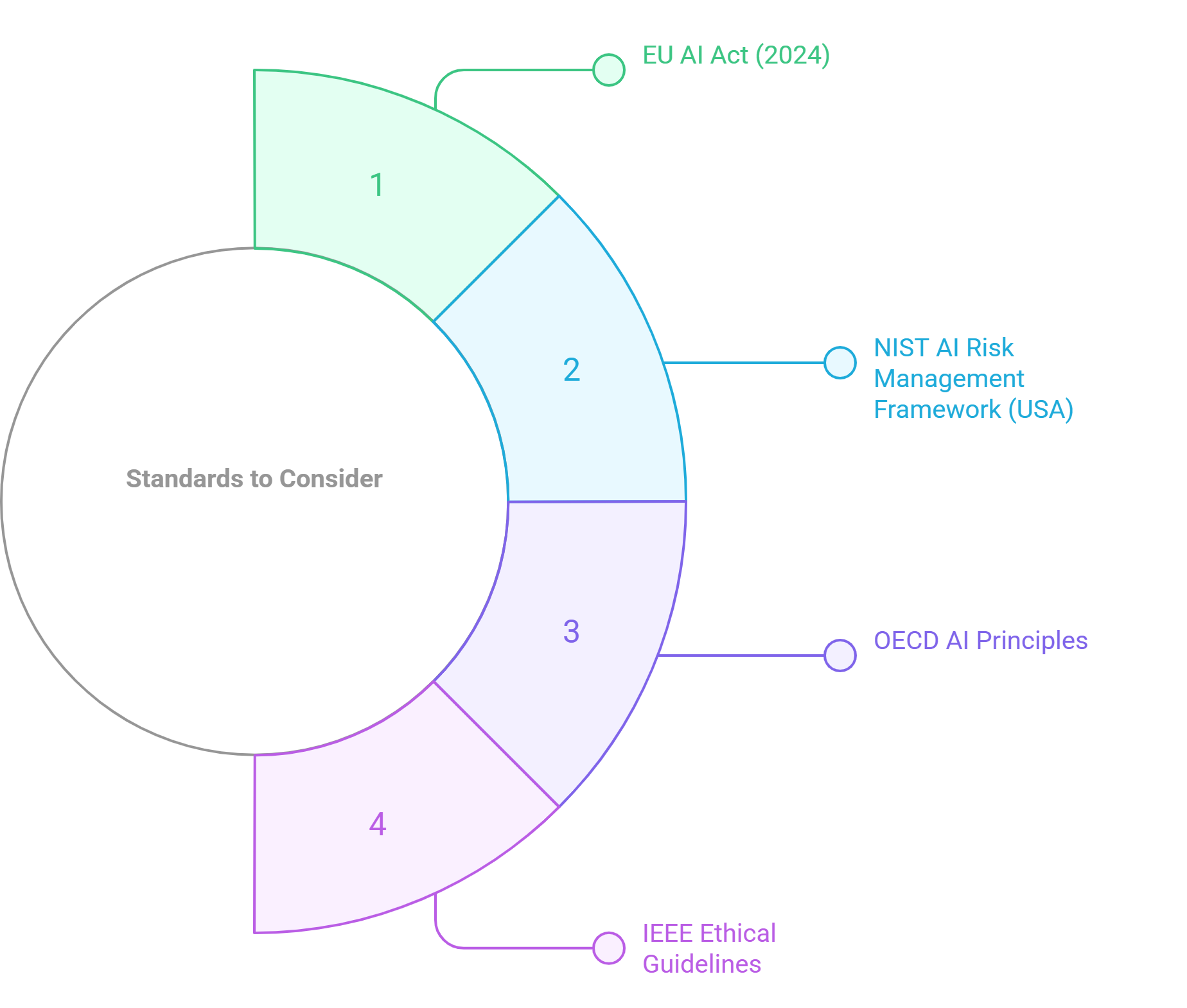

An orderly AI transformation is characterized by its ability to sidestep potential pitfalls and enhance the credibility of the overarching strategy. This involves establishing a robust AI governance framework, a clear transformation roadmap, and dedicated steering and project management committees.

The “talent-trust paradox” highlights a challenge that is not solely about actual skill deficiencies but also about perceived readiness and a lack of mutual trust between leadership and the workforce. Addressing this requires transparent communication, collaborative development of AI initiatives, and leaders actively demonstrating and celebrating AI usage to bridge this perception gap and build mutual confidence.

Even well-designed AI tools may encounter resistance if they are perceived as threatening to established routines or job security. Effective change management strategies are therefore indispensable for ensuring a smooth transition, addressing employee resistance, and strategically positioning AI as an augmentation tool that enhances human capabilities. Transparency and responsible deployment are fundamental to building this trust. This includes adhering to “human-in-the-loop” principles, which ensure human accountability in decision-making processes, particularly for critical applications.
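A human-in-the-loop policy can be expressed as a simple routing rule. The Python sketch below is illustrative only: the `Decision` type, the `route_decision` function, and the 0.85 confidence threshold are hypothetical choices, assuming the model reports a confidence score and each decision is tagged as critical or not. Critical decisions always go to a human reviewer, and routine ones are escalated only when the model is unsure.

```python
from dataclasses import dataclass

# Hypothetical threshold for illustration; a real value would be set per use case.
CONFIDENCE_THRESHOLD = 0.85


@dataclass
class Decision:
    """An AI recommendation awaiting execution (illustrative structure)."""
    action: str
    confidence: float  # model-reported confidence in [0, 1]
    critical: bool     # True for high-impact decisions (e.g. credit, medical, safety)


def route_decision(decision: Decision, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return 'auto' if the AI may act alone, otherwise 'human_review'.

    Critical decisions always go to a person so that a human stays accountable;
    non-critical decisions are escalated only when confidence is below the threshold.
    """
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if decision.critical or decision.confidence < threshold:
        return "human_review"
    return "auto"


if __name__ == "__main__":
    queue = [
        Decision("approve_refund", confidence=0.97, critical=False),
        Decision("approve_refund", confidence=0.60, critical=False),
        Decision("deny_insurance_claim", confidence=0.99, critical=True),
    ]
    for d in queue:
        print(d.action, d.confidence, route_decision(d))
```

In practice, the governance board could own both the threshold and the definition of "critical" for each use case, and each human override could be logged to support later audits.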

The inherent complexity of GenAI models, often described as “black boxes” because of their deep-learning architectures, poses a substantial technical and governance obstacle. When the rationale behind an AI-driven decision is opaque, ensuring fairness, accountability, auditability, and regulatory compliance becomes exceptionally difficult. Consequently, the governance board must insist on the use of “explainable AI (XAI)” methods.

Moreover, AI transformation, even with the most advanced technology and pristine data, will falter without a workforce skilled and adaptable enough to manage or use AI applications. Organizations need to identify and cultivate specialized talent for AI projects, including data scientists, machine learning engineers, data engineers, AI architects, prompt engineers, and AI ethicists. AI ethicists represent a critical emerging role, requiring a unique blend of technical knowledge, regulatory understanding, business acumen, and strong communication skills to integrate ethical perspectives into AI system design and deployment. Enterprises must invest in comprehensive training programs to upskill employees, focusing on AI-complementary skills that enhance human-AI collaboration. An AI-ready culture is a natural extension of a data-driven culture, in which decisions at all levels are guided by analysis and facts and, more importantly, employees are open to trusting insights derived from machines.

While “human-in-the-loop” is a critical governance principle that ensures human oversight and accountability for AI decisions, re-engineering processes calls for a more fundamental shift toward “AI-in-the-workflow” thinking. Agentic AI is no longer merely a decision-support tool but an active participant embedded within workflows. Adopting “AI-in-the-workflow” as a design principle demands a proactive re-imagination of processes, rather than just a reactive oversight mechanism, fundamentally changing how workflows are designed and how human and AI capabilities are integrated.

Finally, consistent communication about AI’s potential impact, emphasizing its benefits, helps teams understand the impending changes and adapt more readily to new approaches. Start small, then scale fast at a pace set by change-management progress and workforce readiness.

Security & Risk Management

When the excitement settles, execution begins. Building an AI-ready organization means designing your organization to run with AI at its core, with proper security and risk management around it. The integration of AI introduces new technological risks that necessitate robust cybersecurity measures. If not managed properly, AI models can be vulnerable to adversarial attacks or inadvertently expose sensitive data. Security protocols must be intrinsically “baked into” the AI-ready technology stack.

AI introduces a new spectrum of risks. Governance frameworks must proactively address these through comprehensive risk assessments for AI projects.

- Bias Risk: Poorly trained AI models can reinforce biases present in their training data, leading to discriminatory outcomes. Such bias can stem from many sources, including data bias, algorithmic bias, human bias, labeling bias, and confirmation bias. Mitigation strategies include using diverse and representative datasets, employing bias-checking tools during data preprocessing, conducting regular fairness audits, and involving diverse development teams.

- Security Vulnerabilities: AI models are susceptible to various adversarial attacks, such as prompt injection, data poisoning, model theft, and evasion attacks, which can manipulate outputs, compromise sensitive data, or exhaust computational resources. Implementing robust cybersecurity protocols, continuous monitoring, and well-defined incident response plans are essential defences.

- Legal & Intellectual Property (IP) Risks: The copyrightability of AI outputs remains a complex legal area, often requiring sufficient human authorship for protection. There are also risks of unintentional use of copyrighted data, intellectual property leakage, and non-compliance with evolving regulations.

- Operational Risk: Failures in AI processes or systems can lead to significant operational disruptions. Continuous monitoring and auditing of AI systems are crucial to detect model drift and anomalies and to ensure ongoing compliance and performance.
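To make continuous drift monitoring more concrete, the Python sketch below computes the Population Stability Index (PSI), one common way (not the only one) to compare a feature's or score's distribution in production against its training-time baseline. The equal-width binning, the function names `psi` and `drift_status`, and the 0.1/0.25 cut-offs (a widely used rule of thumb) are assumptions for illustration; real monitoring pipelines usually track many features and tune thresholds per model.

```python
import math
import random
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum((a_i - e_i) * ln(a_i / e_i)) over bins.

    e_i and a_i are the shares of the baseline and recent samples in bin i.
    Bins are equal-width over the baseline's min/max range (an assumption);
    recent values outside that range are clipped into the end bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        n = len(values)
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / n, eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(score: float) -> str:
    """Map a PSI score to a label using rule-of-thumb thresholds (tune per model)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    random.seed(0)
    baseline = [random.gauss(0, 1) for _ in range(5000)]  # training-time data
    same = [random.gauss(0, 1) for _ in range(5000)]      # no drift
    shifted = [random.gauss(1, 1) for _ in range(5000)]   # mean has moved
    for name, sample in [("same", same), ("shifted", shifted)]:
        score = psi(baseline, sample)
        print(name, round(score, 3), drift_status(score))
```

A scheduled job could run a check like this on recent production inputs and raise an alert, or open a retraining ticket, whenever the status reaches "significant".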

Best practices for AI security include zero-trust security principles, strong encryption protocols, data anonymization techniques, continuous monitoring tools, and well-defined incident response plans. Defences against adversarial attacks such as prompt injection, training-data poisoning, and evasion involve rigorous testing, diverse training data, and AI-powered cybersecurity tools that can detect and respond to sophisticated threats.

At Neurealm, we help enterprises prepare strategically for AI, so they can become sustainable AI enterprises faster.

Explore our services at www.neurealm.com

Author

Rajaneesh Kini | Chief Operating Officer | Neurealm

Rajaneesh Kini has 27+ years of experience in IT and Engineering, spanning Healthcare, Communications, Industrial, and Technology Platforms. He excels in leadership, technology, delivery, and operations, building engineering capabilities, delivering solutions, and managing large-scale operations.

In his previous role as President and CTO at Cyient Ltd, Rajaneesh shaped the company’s tech strategy, focusing on Cloud, Data, AI, embedded software, and wireless network engineering. He is a regular speaker at industry forums, especially on AI/ML. Prior to Cyient, he held leadership positions at Wipro and Capgemini Engineering (Aricent/Altran), where he led global ER&D delivery and the Product Support & Managed Services business.

Rajaneesh holds a Bachelor’s degree in Electronics & Medical Engineering from Cochin University and a Master’s in Computers from BITS Pilani. He has also earned a PGDBM from SIBM, a Diploma in Electric Vehicles from IIT Roorkee, and a Diploma in AI & ML from MIT, Boston.

Outside of work, Rajaneesh is a passionate cricket player and avid fan.